Stop Demos, Start Doing: AI That Works on Your Data

Boom or Bubble?

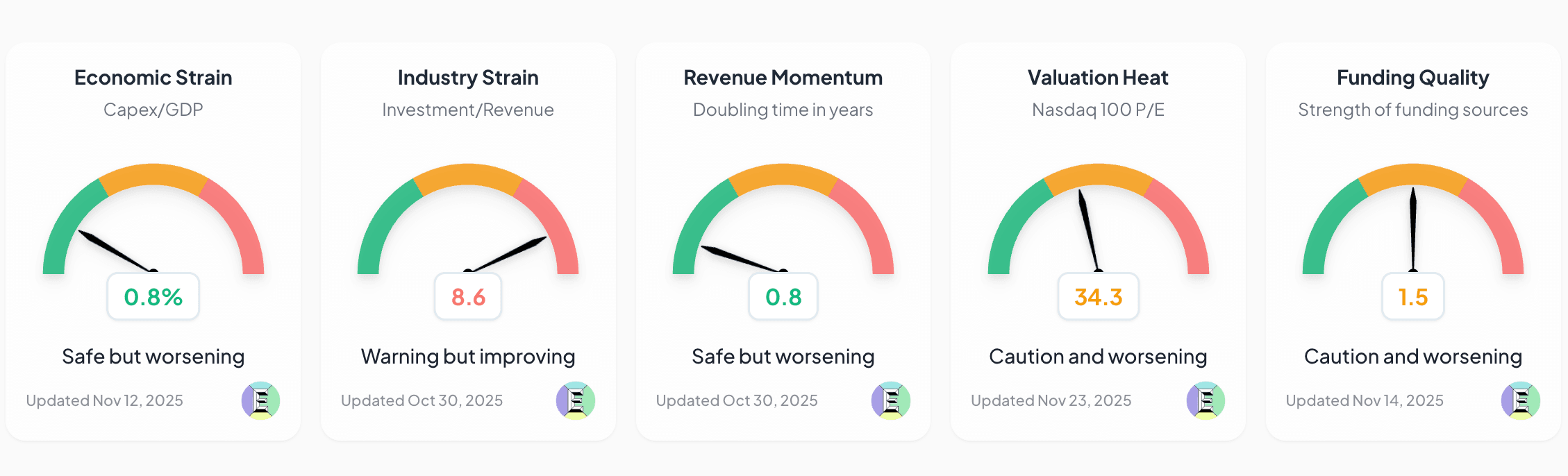

There has been quite a bit of discussion of late as to whether the current AI situation is a boom or a bubble, including Exponential View's very own https://boomorbubble.ai/, which includes these gauges:

(for what it's worth, that particular site-at the time of this writing-doesn't think it is, yet)

I am no economist, and my opinion on that question carries little weight, though I will note that a bubble is a financial concept (asset prices rising beyond fundamentals), not a verdict on whether a technology will deliver. The dot-com era illustrates the point: internet stocks crashed around 2000, yet the internet itself became one of the most powerful engines of growth and job creation in modern history. So even if AI experiences a valuation bubble, that doesn’t settle the question of its long-term impact.

The ROI Reality Check

What is not under debate is the fact that AI has thus far not delivered on its promise. An MIT report summarized by Fortune finds that "95% of organizations are getting zero return" on their AI investment. It goes on to say that "adoption is high, but disruption is low." The same report also says that 95% of all AI pilots fail. A Forbes article puts the failure rate at 85%. The Economist refers to "the AI trough of disillusionment."

What Nabu Does

At Nabu, we don’t build LLMs; we build the infrastructure that makes them useful. We provide agentic workflows, permissioned retrieval, evaluation, and operational controls so companies can deploy AI reliably, safely, and cost-effectively. Our aim is to move from hype to measurable ROI by turning models into production-grade products. And we know a platform alone isn’t enough. Teams also need the how: clear use cases, data readiness, evaluation methods, and a strategy for rolling AI into day-to-day workflows.

Jobs vs. Tasks

Despite the hiring freezes and large layoffs at certain organisations (Reuters report), in which AI almost certainly plays some part as a reason (or an excuse), I think treating AI as a replacement for jobs is premature. What I do think AI can do now is take over certain tasks that a role is responsible for. After all, a job is essentially a collection of tasks and activities.

So a natural starting point for leveraging AI effectively is to capture the tasks and activities a given role performs, and then to consider which of them AI could take over, without any intent of replacing the job itself.

Example Task Catalog: Financial Analyst

- Own monthly close variance analysis

- Prepare budget and rolling forecasts

- Create dashboards (revenue, margin, cash burn)

- Analyse cohort/LTV/CAC and unit economics

- Track headcount plans and compensation impacts

- Manage opex vs. budget; flag risks/opps

- Support board materials and KPI packs

- Evaluate vendor contracts; ROI and payback

- Maintain KPI dictionary and data definitions

- Coordinate audits and SOX/controls evidence

- Create ad hoc analyses for exec questions

- Automate reports; reduce manual work

- Document assumptions and version models

Example Task Catalog: HR Generalist

- Manage full-cycle recruitment with hiring managers

- Draft and post job descriptions; screen candidates

- Coordinate interviews; collect structured feedback

- Run reference checks and offer paperwork

- Onboard new hires (accounts, equipment, training)

- Administer benefits and leave programs

- Guide managers on performance and PIP processes

- Coordinate reviews, calibration, and promotions

- Maintain policies/handbook; communicate updates

- Run engagement surveys; report insights

- Manage visas/immigration with Legal

- Oversee offboarding and exit interviews

- Maintain org charts and headcount reporting

- Advise on compensation bands and offers

- Lead HR projects (DEI, wellness, recognition)

Picking AI-Candidate Tasks

Expecting AI to magically replace either of those jobs at this point in time is unrealistic. But finding opportunities for AI to perform some of their tasks is very attainable!

So a starting point, then, is to have employees list the tasks that make up a given role. Do your best to keep this step AI-agnostic: if employees go into the exercise expecting AI to replace tasks, their responses will be skewed. Then identify which tasks are good AI candidates today (e.g. evaluating vendor contracts or maintaining policies) and which are more of a stretch (e.g. coordinating audits and SOX/controls evidence, or managing visas/immigration with Legal).

Prioritise, Reuse, and Scale

From there, the next step is to look for overlaps, where a single AI solution can be reused across tasks or roles and so create a higher return on investment, and to weigh each task's value against the effort needed to build a solution. For example, onboarding and offboarding solutions that lean on AI might be extremely valuable for a medium or large organisation that does a lot of hiring or has high turnover (perhaps due to seasonal employees). By automating those tasks with AI, the HR Generalist (in this fictitious example) can redirect the freed time to higher-value work.
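One way to make that value-versus-effort trade-off explicit is a simple score per task. The sketch below is a minimal illustration, assuming you have rough estimates of hours saved and build effort for each task; the `Task` fields, the scoring formula, and every number are hypothetical, and reuse is modelled crudely as the number of roles a single solution could serve.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    roles: set[str]             # roles that perform this task; more roles = more reuse
    hours_saved: float          # illustrative estimate of task-hours freed per month
    effort_weeks: float         # illustrative estimate of build effort
    ai_candidate: bool = True   # False for "stretch" tasks


def priority(task: Task) -> float:
    """Value per unit of effort, multiplied by the number of roles that could reuse the solution."""
    return task.hours_saved * len(task.roles) / task.effort_weeks


def rank(tasks: list[Task]) -> list[Task]:
    """Rank AI-candidate tasks by priority, highest first; stretch tasks are excluded."""
    return sorted((t for t in tasks if t.ai_candidate), key=priority, reverse=True)


# Hypothetical estimates for a few tasks from the catalogs above.
tasks = [
    Task("Onboard new hires", {"HR Generalist"}, hours_saved=30, effort_weeks=6),
    Task("Oversee offboarding", {"HR Generalist"}, hours_saved=10, effort_weeks=4),
    Task("Draft job descriptions", {"HR Generalist", "Hiring Manager"}, hours_saved=12, effort_weeks=2),
    Task("Coordinate SOX/controls evidence", {"Financial Analyst"}, hours_saved=20, effort_weeks=10, ai_candidate=False),
]

for t in rank(tasks):
    print(f"{t.name}: {priority(t):.1f}")
# Draft job descriptions: 12.0
# Onboard new hires: 5.0
# Oversee offboarding: 2.5
```

Multiplying by the number of roles is a blunt way to reward reuse; weighting by task volume or frequency would be a reasonable refinement.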

Why Pilots Stall

Why do so many pilots stall? It’s rarely the model. It’s the unglamorous work around it: getting permissioned access to the right data, agreeing on a single source of truth for a task, and defining what "good" looks like so you can measure it. Most teams skip three essentials: (1) data readiness (knowledge hub connections, chunking, metadata, and access controls), (2) task design (a crisp specification with guardrails, fallbacks, and, vitally, a human in the loop), and (3) evaluation (precision/recall, latency, cost, and business outcomes). Without these, pilots impress in a demo and then die in production because they can’t meet reliability, security, or cost targets.
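Evaluation is the easiest of the three to start small. As a minimal sketch, assuming you have a handful of questions for which a reviewer has labelled the relevant documents, retrieval precision and recall can be computed per query and averaged. The document IDs below are made up, and in practice latency, cost, and business outcomes would be tracked alongside these numbers.

```python
from statistics import mean


def precision_recall(retrieved: list[str], relevant: set[str]) -> tuple[float, float]:
    """Precision and recall of one retrieval result against labelled relevant document IDs."""
    unique = list(dict.fromkeys(retrieved))  # ignore duplicate hits, keep order
    hits = sum(1 for doc_id in unique if doc_id in relevant)
    precision = hits / len(unique) if unique else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical labelled evaluation set: (IDs the system retrieved, IDs a reviewer marked relevant).
eval_set = [
    (["policy-7", "policy-2", "faq-1"], {"policy-7", "policy-9"}),
    (["contract-3", "contract-4"], {"contract-3", "contract-4"}),
]

scores = [precision_recall(retrieved, relevant) for retrieved, relevant in eval_set]
mean_precision = mean(p for p, _ in scores)
mean_recall = mean(r for _, r in scores)
print(f"precision={mean_precision:.2f} recall={mean_recall:.2f}")
# precision=0.67 recall=0.75
```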

What Good Looks Like

What does good look like? Pick one role and one narrow, high-volume task (e.g. variance-analysis commentary drafts or job-description generation). Create an agentic workflow by defining a small set of policies the agent must obey, and instrument everything. Run an experiment: current process vs. AI-assisted. If you don’t see cycle-time reduction, higher precision, or lower exception rates, fix the task or move on. If you do, productise: add observability, retries, and approval flows; publish a playbook; scale to adjacent tasks. Repeat this pattern until you’ve replaced a meaningful share of task-hours (not jobs!) with governed, measurable automation that actually ships.
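The experiment step can be as simple as logging each run of the task under both processes and applying a decision rule agreed in advance. This is a minimal sketch under stated assumptions: the `Run` fields, the 20% cycle-time threshold, and the rule that exception rates must not rise are illustrative choices rather than a prescribed standard, and the sample runs are invented.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Run:
    cycle_minutes: float  # time from task start to an approved output
    exception: bool       # True if the run needed escalation or manual rework


def summarise(runs: list[Run]) -> tuple[float, float]:
    """Return (median cycle time, exception rate) for a set of runs."""
    return median(r.cycle_minutes for r in runs), sum(r.exception for r in runs) / len(runs)


def decide(baseline: list[Run], assisted: list[Run], min_cycle_reduction: float = 0.2) -> str:
    """Productise only if median cycle time drops enough and exceptions do not get worse."""
    base_cycle, base_exc = summarise(baseline)
    ai_cycle, ai_exc = summarise(assisted)
    reduction = 1 - ai_cycle / base_cycle
    if reduction >= min_cycle_reduction and ai_exc <= base_exc:
        return "productise"
    return "fix the task or move on"


# Invented runs: current process vs. AI-assisted drafting of variance commentary.
baseline = [Run(60, False), Run(75, True), Run(50, False), Run(80, False)]
assisted = [Run(20, False), Run(35, False), Run(25, True), Run(30, False)]

print(decide(baseline, assisted))
# productise
```

Fixing `min_cycle_reduction` (and any precision threshold) before the experiment starts keeps the result from being rationalised after the fact.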

From Pilots to Products

Even if valuations are frothy, the real issue is execution. Most enterprises aren’t getting ROI yet, which is why Nabu focuses on infrastructure and tooling. The wins don’t come from bigger models; they come from plumbing that lets those models work on your data safely and repeatably. That means authenticated connectors, clean metadata, permissioned retrieval, evaluation and observability, and run-time controls so agents do exactly what they’re allowed to do, and nothing else.
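Two of those controls are easy to picture in code. The sketch below assumes each indexed chunk carries the access-control groups copied from its source system; it filters retrieved chunks against the requesting user's groups before anything reaches the model, and checks every tool call against an explicit allowlist. `Chunk`, `permitted`, `call_tool`, and the tool names are hypothetical illustrations, not Nabu's API.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # ACL metadata copied from the source system at ingestion


def permitted(chunks: list[Chunk], user_groups: set[str]) -> list[Chunk]:
    """Keep only chunks the requesting user could open in the source system."""
    return [c for c in chunks if c.allowed_groups & user_groups]


# Hypothetical run-time allowlist: the only tools this agent may call.
ALLOWED_TOOLS = {"search_policies"}


def call_tool(name: str, args: dict[str, Any], registry: dict[str, Callable[..., Any]]) -> Any:
    """Refuse any tool call that is not explicitly allowed, even if the tool exists."""
    if name not in ALLOWED_TOOLS:
        raise PermissionError(f"tool {name!r} is not allowed for this agent")
    return registry[name](**args)


chunks = [
    Chunk("hr-handbook", "Leave policy text", frozenset({"all-staff"})),
    Chunk("comp-bands", "Compensation band text", frozenset({"hr", "finance"})),
]
registry = {
    "search_policies": lambda query: f"results for {query}",
    "update_payroll": lambda **kwargs: "payroll updated",  # exists, but not allowlisted
}

print([c.doc_id for c in permitted(chunks, {"all-staff", "engineering"})])
# ['hr-handbook']
print(call_tool("search_policies", {"query": "leave"}, registry))
# results for leave
```

Filtering after retrieval is the simplest option; pushing the same group filter into the retrieval query itself avoids spending the result budget on chunks that will be dropped anyway.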

Start with work, not hype: map each role to its tasks, pick the few with clear payback, and wire up an agentic workflow that’s grounded in your systems (not screenshots and wishful prompts). Measure the outcomes the business cares about, such as cycle time, accuracy/precision, exception rates, and cost per task, and promote only what hits agreed thresholds into production. This is how you move from pilots to products: strategic experiments that lead to measurable outcomes, and stronger guardrails that turn general-purpose models into meaningful agentic workflows with access to your data.

That’s the narrative we want to change, from AI as spectacle to AI as dependable infrastructure that compounds value.

Nabu. AI for Everyone.